ACROSS VOIDS: AN INTERACTIVE EXPERIENCE ON GRIEF

WORK BY DR NORAH LORWAY, SWCTN IMMERSION FELLOW

INFORMATION DOCUMENT NORAH LORWAY

WHAT

Across Voids is an immersive experience that explores how AI and immersive technologies can help support the grieving process. The video you are seeing is a stereo rendering of the multichannel live performance of the work at the University of Birmingham’s BEAST FEAsT surround sound festival (May 2019) and the NHS Cornwall Partnerships “Artificial Intelligence” event (April 2019).

WHY

Grief is often discussed in terms of how it can be cured rather than, perhaps more realistically, how it can be supported (Hughes 2011). Immersive technologies, such as VR, have been shown to help create a healthier mind through examining our virtual selves (Georgieva 2017). Using these technologies with individuals experiencing grief may make it possible to provide support and healing on an ongoing basis, not just at the beginning of a person’s grief journey.

The work is informed by the following: SuperBetter, a game by Jane McGonigal; Way to Go, a web experience by AATOAA studios in Montreal; and Deprogrammed, a meditative virtual walk experience by Mia Donovan. It is also informed by C. S. Lewis’s A Grief Observed.

HOW DOES IT WORK?

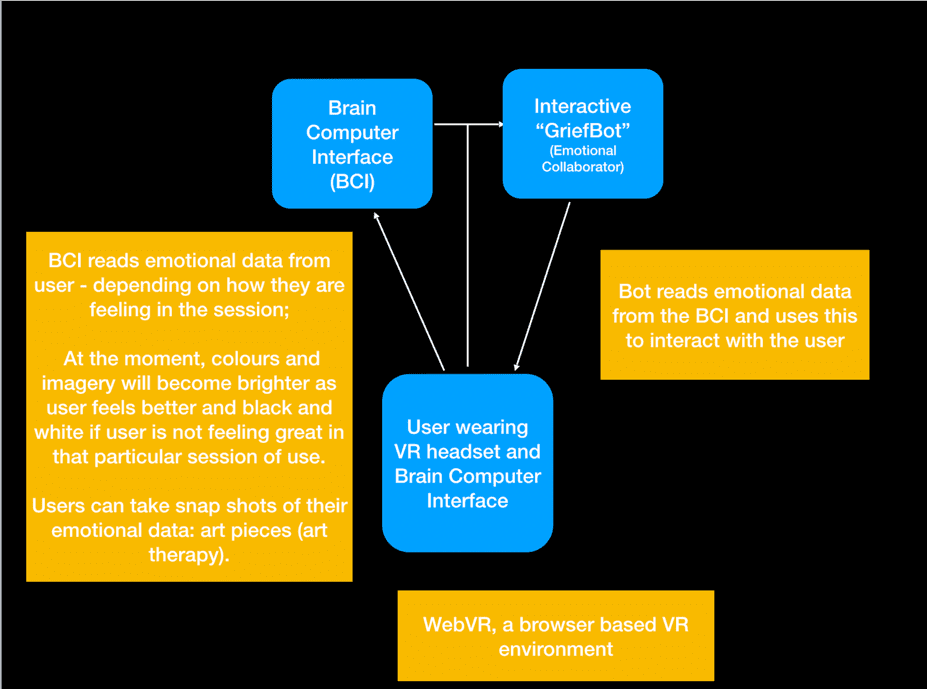

This experience can be seen as a sort of “emotional fitbit” for helping users explore their own experience of grief and loss. The user wears a Brain-Computer Interface (BCI) and a VR headset (such as the HTC Vive) and is able to explore the experience at their own pace, using WebVR. The user’s emotional output is read as electroencephalogram (EEG) signals from the BCI and fed into the experience: brighter colours = happier mood; darker/white colours = neutral emotions; and so on.
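As a rough illustration of the colour mapping described above, the sketch below turns a mood score into a colour whose brightness rises with happier mood. It is an assumption-laden sketch, not the code used in the work: the function name `mood_to_rgb`, the 0-to-1 valence scale, the fixed hue and saturation, and the brightness range are all hypothetical choices made for this example.

```python
import colorsys


def mood_to_rgb(valence, hue=0.58, saturation=0.6):
    """Map a mood score in [0, 1] to an (r, g, b) tuple with channels in [0, 1].

    Hypothetical mapping: higher valence (happier mood) gives a brighter colour.
    """
    v = min(max(valence, 0.0), 1.0)      # clamp out-of-range scores
    brightness = 0.2 + 0.8 * v           # assumed range: never fully black
    return colorsys.hsv_to_rgb(hue, saturation, brightness)


# A low-mood reading and a high-mood reading give a darker and a brighter colour.
low = mood_to_rgb(0.1)
high = mood_to_rgb(0.9)
```

Only the brightness changes here; a fuller mapping could also vary hue or saturation with the classified emotion.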

The same emotional output is also fed into an interactive GriefBot, which responds to it and chats with the user, acting as a guide for as long as the user chooses to stay in the experience.

The project can be broken down into the components described in Fig. 2.

Below, you will find an explanation of each component and its use within the experience.

a). AUDIO AND VISUALS

The experience uses 360-degree video and spatial audio.

The audio was created using the SuperCollider audio programming language. I built a custom software tool which allows for surround sound using Vector Base Amplitude Panning (VBAP), a technique that places a sound at a chosen direction by distributing it, with weighted gains, across the loudspeakers nearest to that direction. Visuals were created in Unity.
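To make the panning idea concrete, here is a minimal sketch of two-dimensional VBAP for a single pair of loudspeakers. It is written in Python purely for illustration and is not the SuperCollider tool built for the work; the function name `vbap_pair_gains` and the speaker angles in the example are assumptions. The source direction is expressed as a weighted sum of the two speaker direction vectors, and the weights are then normalised so that total power stays constant.

```python
import math


def vbap_pair_gains(source_deg, spk1_deg, spk2_deg):
    """Return constant-power gains (g1, g2) for a source panned between two speakers.

    Angles are azimuths in degrees. Solves p = g1 * l1 + g2 * l2 for the gains,
    where p, l1 and l2 are unit vectors for the source and the two speakers.
    """
    px, py = math.cos(math.radians(source_deg)), math.sin(math.radians(source_deg))
    l1x, l1y = math.cos(math.radians(spk1_deg)), math.sin(math.radians(spk1_deg))
    l2x, l2y = math.cos(math.radians(spk2_deg)), math.sin(math.radians(spk2_deg))

    det = l1x * l2y - l1y * l2x
    if abs(det) < 1e-12:
        raise ValueError("speakers must not lie on the same line through the listener")

    # Cramer's rule for the 2x2 system.
    g1 = (px * l2y - py * l2x) / det
    g2 = (l1x * py - l1y * px) / det

    # Normalise so that g1^2 + g2^2 == 1 (constant perceived loudness).
    norm = math.hypot(g1, g2)
    return g1 / norm, g2 / norm


# A source straight ahead, with speakers at +30 and -30 degrees, is shared equally.
centre = vbap_pair_gains(0.0, 30.0, -30.0)
# A source at a speaker's own angle is played by that speaker alone.
hard_left = vbap_pair_gains(30.0, 30.0, -30.0)
```

A full surround setup repeats this calculation for whichever adjacent speaker pair (or, in 3D, speaker triplet) encloses the source direction.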

b). BRAIN COMPUTER INTERFACE (BCI) AND GRIEF BOT

When you are grieving, you often feel out of touch with your own emotional state, and so it can be hard to analyse what you might be feeling from one moment to the next. In this experience, a BCI (a direct communication pathway between a brain and an external device) is used to measure electroencephalogram (EEG) data, which is then classified into different emotion states by means of EEG-based functional connectivity patterns. Three connectivity indices were used to estimate brain functional connectivity in these EEG signals: correlation, coherence and phase synchronisation. I then converted the patterns into readable data which feeds into the bot, which in turn responds through a conversation with the user (Savin-Baden & Burden 2019). This creates a collaborative, fluid interaction between the bot and user, promoting further immersion into the experience.
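The three connectivity indices named above can be sketched for a single pair of channels as follows. This is a minimal, standard-library illustration under stated assumptions, not the pipeline used in the work: the function names are hypothetical, the Fourier transform is a naive one suited only to short signals, coherence is computed at one frequency bin from unwindowed segments, and the test signals are synthetic sine waves rather than EEG.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform; adequate for short example signals."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def pearson(x, y):
    """Correlation index: Pearson correlation between two channels."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def coherence_at_bin(x, y, seg_len, k):
    """Coherence index: magnitude-squared coherence at DFT bin k,
    averaged over non-overlapping segments of length seg_len."""
    sxx = syy = 0.0
    sxy = 0j
    for start in range(0, len(x) - seg_len + 1, seg_len):
        fx = dft(x[start:start + seg_len])[k]
        fy = dft(y[start:start + seg_len])[k]
        sxx += abs(fx) ** 2
        syy += abs(fy) ** 2
        sxy += fx * fy.conjugate()
    return abs(sxy) ** 2 / (sxx * syy)


def instantaneous_phase(x):
    """Phase of the analytic signal (Hilbert transform computed via the DFT)."""
    n = len(x)
    spec = dft(x)
    half = (n + 1) // 2
    for k in range(n):
        if k == 0 or (n % 2 == 0 and k == n // 2):
            continue                          # keep DC and Nyquist unchanged
        spec[k] *= 2 if k < half else 0       # double positive, zero negative frequencies
    analytic = [sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
                for t in range(n)]
    return [cmath.phase(z) for z in analytic]


def phase_locking_value(x, y):
    """Phase synchronisation index: 1 means a constant phase lag, 0 means none."""
    px, py = instantaneous_phase(x), instantaneous_phase(y)
    return abs(sum(cmath.exp(1j * (a - b)) for a, b in zip(px, py)) / len(px))


# Synthetic example: two sine waves at the same frequency, a quarter of pi apart.
n = 128
x = [math.sin(2 * math.pi * 8 * t / n) for t in range(n)]
y = [math.sin(2 * math.pi * 8 * t / n + math.pi / 4) for t in range(n)]
indices = (pearson(x, y), coherence_at_bin(x, y, 32, 2), phase_locking_value(x, y))
```

For these idealised signals the coherence and phase locking value are both close to 1, while the correlation is lower because of the phase lag, which shows why the three indices are not interchangeable. In practice the indices would be computed for many channel pairs and frequency bands, and the resulting pattern passed to a classifier.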

For those interested, the code for the audio, the visuals and the bot will be available through GitHub in early 2020. For more information, please visit norahlorway.com

WORKS CITED

Fosch Villaronga E. 2019. “I Love You,” Said the Robot: Boundaries of the Use of Emotions in Human-Robot Interactions. In: Ayanoğlu H., Duarte E. (eds) Emotional Design in Human-Robot Interaction. Human–Computer Interaction Series. Springer, Cham

Georgieva, Iva. 2017. Trauma and Self-Narrative in Virtual Reality: Toward Recreating a Healthier Mind. Frontiers in ICT. 4. 10.3389/fict.2017.00027.

Hinrichs H, Machleidt W. 1992. Basic emotions reflected in EEG-coherences. Int J Psychophysiology. 13: 225–232.

Hughes, V. 2011. “Shades of Grief: When Does Mourning Become a Mental Illness?” Scientific American. June 2011.

James W. 1884. What is an emotion? Mind 9: 188–205

Murugappan M, Nagarajan R, Yaacob S. 2010. Classification of human emotion from EEG using discrete wavelet transform. J Biomed Sci Eng 3: 390–396.

Sammler D, Grigutsch M, Fritz T, Koelsch S. 2007. Music and emotion: Electrophysiological correlates of the processing of pleasant and unpleasant music. Psychophysiology 44: 293–304.

Savin-Baden, M. & Burden, D. 2019. “Digital Immortality and Virtual Humans”. Postdigit Sci Educ 1: 87. https://doi.org/10.1007/s42438-018-0007-6

Schmidt LA, Trainor LJ. 2001. Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions. Cognition Emotion 15: 487–500