At the start of the fellowship, my plan was to run a series of participatory Deep Listening Walks along the River Frome, a practice outlined in a previous blog post HERE. This was put on hold due to the pandemic, so I sought out other methods to engage with non-deterministic processes: to create conditions for improvisation, and for ideas and processes to emerge. I decided to find a way to collaborate with the river, aiming not to be fully in control of the outcome, and to embrace some of the agency of the river, as reflected in my initial fellowship proposal to ‘re-wild water data’, outlined HERE.

I started by listening to the underwater soundscape of the river through hydrophones and I found myself wading right in, to listen to different areas. Small bits of algae, river plants, and sediment from the river stayed with me when I waded out. I decided to bring these river materials into dialogue with 16mm film.

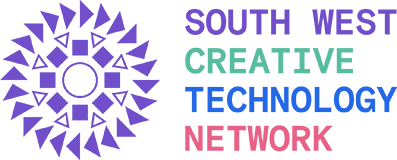

In the dark, I laid out the sediment and matter from the river onto film, and flashed a light on and off. I then developed the film using the eco-processing recipe Caffenol, a mixture of instant coffee, vitamin C and washing soda. (Thanks to Vicky Smith at BEEF for showing me this method.)

Physical traces were generated through a direct encounter between the material qualities of the river particles and the film itself, resulting in a photogram film. Sound is generated through the exposure of the optical soundtrack of film, which provides another opportunity for listening, this time to the shadows and traces of the river particles.

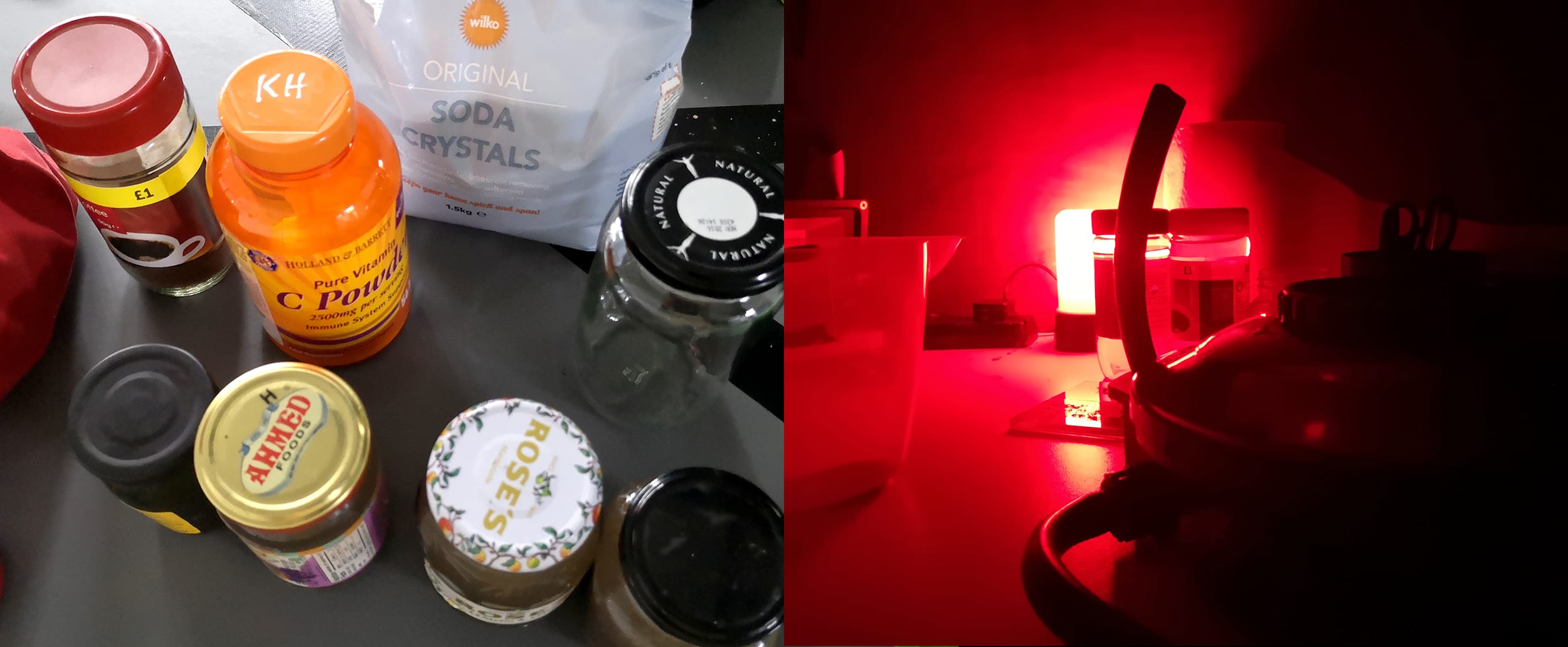

Film is sensitive and responds to small changes. Layering wet materials directly onto film starts interactions with the emulsion, in tandem with many other layers of indeterminacy and surprise. This time in the darkroom involves long durations of patiently waiting in the murky darkness, illuminated by a dim red light. I am reminded of the Deep Listening Walks, and sense a connection with the way these durational tasks highlight a non-visual sense and thus intensify my focus.

The first public outing for this film was at MUTEK Montréal, September 2020, in their online exhibition “Distant Arcades”, as a 3-minute digital edit from a scan of the material I had created so far. The soundtrack combined hydrophone recordings with the optical soundtrack of the analogue film, recorded from the 16mm projector.

OPTICAL SOUNDTRACK : the final soundtrack on a motion picture, which appears as a band of black and white serrations along a strip of film to the left of the composite print. Light is shined through the serrations and is converted to audible sound.

Definition from dictionary.com

The next outing was reconfigured for Centre of Gravity as part of BEEF’s ‘Department of Moving Images’ group show, sited in the former Gardiner Haskins department store in Bristol city centre alongside an amazing array of work from Bristol artists. I responded to the idea of the department store and to our collective approach of salvaging and reusing fittings and fixtures discarded in the empty building. I created a ‘shop display’ of 9 monitors, each looping a different section of the 16mm film, accompanied by a hydrophonic soundscape. The short films and soundtrack overlap and layer in constantly different ways, creating a non-repeating sequence. The River Frome is culverted under Bristol city centre, and flows hidden underground not far from Gardiner Haskins.

Following this exhibition, I continued to work on the film by generating more material, and edited it on a Steenbeck flatbed editor using ‘cut and splice’, a completely different process from the digital non-linear editing I was previously accustomed to.

For a BEEF event at the closing of the exhibition, I made a live performance with the film, by adjusting the speed of the projector live, and mixing the optical soundtrack with hydrophone recordings from the river.

I had this longer edit of the film scanned to make a digital version; however, this scan does not include the optical soundtrack. I was curious to find alternative methods to re-create the optical ‘soundtrack’, whilst also being able to manipulate the speed of the playback. I collaborated with audiovisual programmer Matthew Olden, who built a MAX patch to convert the light and dark areas of a vertical stripe of the film into an audio waveform. Working with this translation as a digital process opens up many other possibilities. The ‘stripe’ can be read anywhere along the film (not just at the edge), and this ‘reading’ can then be applied and mapped in multiple ways. Matthew incorporated a filtering EQ that reads the film in 8 vertical segments and maps the overall brightness of each segment to the ‘gain’ of the corresponding frequency band. In future I will incorporate this into the live performance set-up, so I can mix between the analogue optical soundtrack, the digital reading of the film (from a live camera on the projection), and hydrophone recordings. Below is a digital scan of the 16mm film, with a soundtrack created using the MAX patch.
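For readers curious about how light and dark can become sound, here is a minimal Python sketch of the idea described above. It is not Matthew's MAX patch, only an illustration under some loud assumptions: a frame is a grid of brightness values between 0.0 (dark) and 1.0 (light), the ‘stripe’ is a single pixel column read from top to bottom, and the 8 ‘vertical segments’ are taken to be side-by-side strips across the frame width. The names `stripe_to_samples` and `band_gains` are hypothetical.

```python
from typing import List, Sequence

# Assumption: a frame is a list of rows, each row a list of pixel
# brightness values from 0.0 (dark) to 1.0 (light).
Frame = Sequence[Sequence[float]]


def stripe_to_samples(frame: Frame, column: int) -> List[float]:
    """Read one vertical stripe (a single pixel column) from top to bottom.

    Brightness is re-centred around zero so the values sit in [-1.0, 1.0],
    the usual range for audio samples.
    """
    return [2.0 * row[column] - 1.0 for row in frame]


def band_gains(frame: Frame, bands: int = 8) -> List[float]:
    """Split the frame into side-by-side vertical segments and return the
    mean brightness of each one, to be used as the gain of an EQ band."""
    width = len(frame[0])
    if width < bands:
        raise ValueError("frame is narrower than the number of bands")
    gains = []
    for b in range(bands):
        start = b * width // bands
        stop = (b + 1) * width // bands
        pixels = [value for row in frame for value in row[start:stop]]
        gains.append(sum(pixels) / len(pixels))
    return gains


# A tiny made-up frame: the left half is dark, the right half is light.
frame = [[0.0] * 4 + [1.0] * 4 for _ in range(4)]
edge_samples = stripe_to_samples(frame, column=0)  # stripe at the left edge
gains = band_gains(frame, bands=8)                 # one gain per EQ band
```

Reading successive frames and joining their stripes end to end would give a continuous waveform, and changing `column` moves the ‘stripe’ away from the edge of the film. How the gains are assigned to particular frequency bands is left open here.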